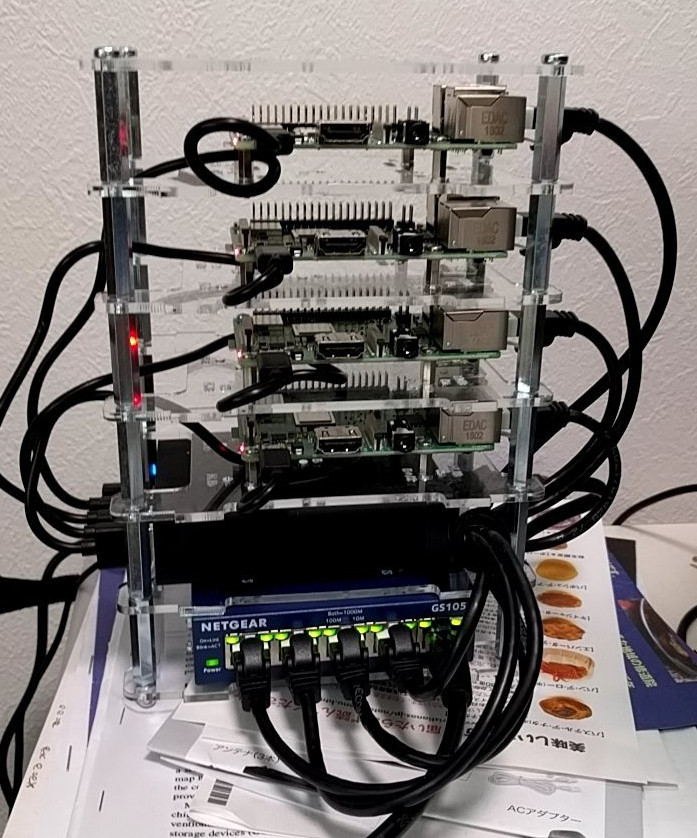

Set up Kubernetes on a Raspberry Pi cluster running Raspbian

I wanted to play around with OpenFaaS and, more generally, could use a local Kubernetes setup that is an actual cluster rather than minikube.

There are a couple of nice guides from the OpenFaaS community, and I am mostly following their steps.

Where I ran into trouble, though, was the setup of the k8s dashboard - all the guides I found were either outdated or missing information. I had to piece things together to get it to work. I kept my notes and also put my script into a gist, as things in k8s land are moving so fast that links to external docs can quickly die or no longer reflect what you saw when you did it yourself.

DHCP setup

You don't want your cluster nodes' IPs to change. Set up fixed IPs for your nodes on your router. If you have no local DHCP server available, you need to use the original version of the make-rpi.sh script, which also sets up a DHCP server. See below.

In my case I set up the IPs 192.168.42.40 to 192.168.42.43 for my nodes, with .40 being the master node.
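If you also want to reach the nodes by name from your workstation, a small sketch like the following prints /etc/hosts style lines for the addresses above. The hostnames are the ones passed to make-rpi.sh further down; which of .41 to .43 goes to which worker is my assumption for illustration, and print_hosts is just a name I made up.

```shell
#!/bin/sh
# Print /etc/hosts style lines for the four nodes.
# Assumption: .40 is the master (as stated above); mapping .41-.43 to
# pikube-node-0..2 is illustrative only, adjust to your router setup.
print_hosts() {
    base="192.168.42"
    echo "$base.40 pikube-master"
    i=0
    while [ "$i" -lt 3 ]; do
        echo "$base.$((41 + i)) pikube-node-$i"
        i=$((i + 1))
    done
}

print_hosts
```

You could append the output to /etc/hosts on your workstation if your router does not resolve the names for you.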

Provision base system

Get a Raspbian Stretch Lite image from here.

I used 2018-11-13-raspbian-stretch-lite.img

"Burn" that image onto an SD card using dd or, if you prefer a GUI, Etcher.

Modify the base system

This is a modified version of this script - I use a separate DHCP server and did not want to have one running on the Pi. Everything else is the same.

The script will create two mount points: /mnt/rpi/root and /mnt/rpi/boot. Make sure there is nothing in the way.

Put your SSH public key into a file named template-authorized_keys in the same directory as the make-rpi.sh script.

    export DEV=sda
    export IMAGE=2018-11-13-raspbian-stretch-lite.img

DEV should be the SD card's device and IMAGE the name of the Raspbian image. Edit if necessary.
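Before running the script it can help to check the prerequisites mentioned so far. The following is a minimal sketch, not part of make-rpi.sh: preflight is a hypothetical helper, and reading "nothing in the way" as "the mount point directories are empty or absent" is my assumption.

```shell
#!/bin/sh
# Hypothetical pre-flight check, not part of make-rpi.sh.
# $1: directory holding make-rpi.sh (default: current directory)
# $2: base directory of the mount points (default: /mnt/rpi)
preflight() {
    dir="${1:-.}"
    mnt="${2:-/mnt/rpi}"
    problems=0

    if [ -z "${DEV:-}" ]; then
        echo "DEV is not set"
        problems=$((problems + 1))
    fi
    if [ -z "${IMAGE:-}" ]; then
        echo "IMAGE is not set"
        problems=$((problems + 1))
    fi
    if [ ! -f "$dir/template-authorized_keys" ]; then
        echo "missing $dir/template-authorized_keys"
        problems=$((problems + 1))
    fi
    # Assumption: "nothing in the way" means the mount points are empty.
    for sub in root boot; do
        if [ -d "$mnt/$sub" ] && [ -n "$(ls -A "$mnt/$sub")" ]; then
            echo "$mnt/$sub is not empty"
            problems=$((problems + 1))
        fi
    done

    # Succeed only if nothing was reported.
    [ "$problems" -eq 0 ]
}

# Usage:
#   preflight . && echo "ready to run make-rpi.sh"
```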

Run the script once per node to modify the four SD cards/images:

    sudo ./make-rpi.sh pikube-master
    sudo ./make-rpi.sh pikube-node-0
    sudo ./make-rpi.sh pikube-node-1
    sudo ./make-rpi.sh pikube-node-2

Then boot the system on all Pis.

Install docker

On all nodes

curl -sLS https://get.docker.com | sudo sh

sudo usermod -aG docker pi

Prepare k8s installation

Do the following on all nodes:

Disable swap on all nodes

    sudo dphys-swapfile swapoff && \
      sudo dphys-swapfile uninstall && \
      sudo update-rc.d dphys-swapfile remove

This should show no entries

sudo swapon --summary
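If you want to script this check across nodes, here is a minimal sketch. It relies on /proc/swaps listing active swap areas below a single header line, so any line after the first means swap is still on. The function name swap_is_off is made up for this example.

```shell
#!/bin/sh
# Return success if no swap area is active.
# $1: file in /proc/swaps format (default: /proc/swaps)
swap_is_off() {
    swaps_file="${1:-/proc/swaps}"
    # Skip the header line; any remaining non-empty line is an active swap area.
    if tail -n +2 "$swaps_file" | grep -q .; then
        return 1
    fi
    return 0
}

# Usage on a node:
#   swap_is_off && echo "swap is off" || echo "swap is still on"
```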

Edit /boot/cmdline.txt

sudo nano /boot/cmdline.txt

Add this text at the end of the line, but don't create any new lines:

cgroup_enable=cpuset cgroup_memory=1 cgroup_enable=memory
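Doing this by hand on four nodes gets tedious, so here is a sketch of the same edit as a function. It is an assumption-laden helper of my own (append_cgroup_flags is not from any guide): it expects the file to hold the kernel command line on its first line and does nothing if the flags are already present.

```shell
#!/bin/sh
# Append the cgroup flags to the end of the first line of a cmdline.txt
# style file. Safe to run twice: it exits early if the flags are present.
# append_cgroup_flags is a hypothetical helper name.
append_cgroup_flags() {
    file="$1"
    flags="cgroup_enable=cpuset cgroup_memory=1 cgroup_enable=memory"

    if grep -qF "$flags" "$file"; then
        return 0
    fi

    # Replace any trailing whitespace on line 1 with a space plus the flags.
    # Write to a temp file first so a failed sed leaves the original intact.
    sed "1s/[[:space:]]*\$/ $flags/" "$file" > "$file.new" && mv "$file.new" "$file"
}

# Usage on a Pi, from a root shell:
#   append_cgroup_flags /boot/cmdline.txt
```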

Now reboot all nodes (do not skip this)

sudo reboot

Install kubernetes

Set up the package manager and install kubeadm

    curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add - && \
      echo "deb http://apt.kubernetes.io/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list && \
      sudo apt-get update -q && \
      sudo apt-get install -qy kubeadm

On master

sudo kubeadm config images pull -v3

And init master

    sudo kubeadm init --token-ttl=0
    ...
    Your Kubernetes master has initialized successfully!

    To start using your cluster, you need to run the following as a regular user:

      mkdir -p $HOME/.kube
      sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
      sudo chown $(id -u):$(id -g) $HOME/.kube/config

    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
      https://kubernetes.io/docs/concepts/cluster-administration/addons/

    You can now join any number of machines by running the following on each node
    as root:

      kubeadm join 192.168.42.40:6443 --token 80zx1c.1kz4g133riual7qf --discovery-token-ca-cert-hash sha256:804d2fa237ddbacf125b6b350d8a4a9b33bca4338bddad567f40a1268a4dd94d
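It is worth saving the init output, since the join command is needed on every node later. A small sketch, assuming you redirected the output into a file (kubeadm-init.log is a name I made up) and that the join command sits on a single line as in the v1.13 output above; extract_join_cmd is a hypothetical helper.

```shell
#!/bin/sh
# Print the first "kubeadm join ..." command found in saved kubeadm init output.
# Assumes the whole join command is on one line, as in the output above.
extract_join_cmd() {
    sed -n 's/^[[:space:]]*\(kubeadm join .*\)$/\1/p' "$1" | head -n 1
}

# Usage:
#   sudo kubeadm init --token-ttl=0 | tee kubeadm-init.log
#   extract_join_cmd kubeadm-init.log
```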

Install the Weave Net driver

    kubectl apply -f \
      "https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version | base64 | tr -d '\n')"

    pi@pikube-master:~ $ kubectl get pods --namespace=kube-system
    NAME                                    READY   STATUS              RESTARTS   AGE
    coredns-86c58d9df4-h8rwq                0/1     Pending             0          5m56s
    coredns-86c58d9df4-pwhpq                0/1     Pending             0          5m56s
    etcd-pikube-master                      1/1     Running             0          5m57s
    kube-apiserver-pikube-master            1/1     Running             0          4m57s
    kube-controller-manager-pikube-master   1/1     Running             0          6m2s
    kube-proxy-g59t9                        1/1     Running             0          5m56s
    kube-scheduler-pikube-master            1/1     Running             0          4m51s
    weave-net-rw2nz                         0/2     ContainerCreating   0          27s

eventually

    pi@pikube-master:~ $ kubectl get pods --namespace=kube-system
    NAME                                    READY   STATUS    RESTARTS   AGE
    coredns-86c58d9df4-h8rwq                1/1     Running   0          7m9s
    coredns-86c58d9df4-pwhpq                1/1     Running   0          7m9s
    etcd-pikube-master                      1/1     Running   0          7m10s
    kube-apiserver-pikube-master            1/1     Running   0          6m10s
    kube-controller-manager-pikube-master   1/1     Running   0          7m15s
    kube-proxy-g59t9                        1/1     Running   0          7m9s
    kube-scheduler-pikube-master            1/1     Running   0          6m4s
    weave-net-rw2nz                         2/2     Running   0          100s
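Instead of eyeballing the READY column, you could filter for pods that are not fully ready yet. This is only a sketch of my own: unready_pods is a made-up name, and it assumes the column layout shown above (NAME first, READY second, in the form ready/total).

```shell
#!/bin/sh
# Read "kubectl get pods" output on stdin and print the names of pods
# whose READY column (ready/total) is not yet complete.
# Assumes NAME is column 1 and READY is column 2, as in the listing above.
unready_pods() {
    awk 'NR > 1 { split($2, r, "/"); if (r[1] != r[2]) print $1 }'
}

# Usage:
#   kubectl get pods --namespace=kube-system | unready_pods
```

An empty result means everything in the namespace has come up.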

Join nodes

By default the join token expires after 24 hours, so the usual advice is to join right away. Because I passed --token-ttl=0 to kubeadm init above, this token should not expire. If you do need a new one later, running sudo kubeadm token create --print-join-command on the master should print a fresh join command.

    sudo kubeadm config images pull -v3
    sudo kubeadm join 192.168.42.40:6443 --token 80zx1c.1kz4g133riual7qf --discovery-token-ca-cert-hash sha256:804d2fa237ddbacf125b6b350d8a4a9b33bca4338bddad567f40a1268a4dd94d

Checking on master

    pi@pikube-master:~ $ kubectl get nodes
    NAME            STATUS     ROLES    AGE   VERSION
    pikube-master   Ready      master   12m   v1.13.2
    pikube-node-0   NotReady   <none>   13s   v1.13.2

and eventually

    pi@pikube-master:~ $ kubectl get nodes
    NAME            STATUS   ROLES    AGE   VERSION
    pikube-master   Ready    master   13m   v1.13.2
    pikube-node-0   Ready    <none>   78s   v1.13.2

Joining the other nodes

    pi@pikube-master:~ $ kubectl get nodes
    NAME            STATUS     ROLES    AGE    VERSION
    pikube-master   Ready      master   16m    v1.13.2
    pikube-node-0   Ready      <none>   4m7s   v1.13.2
    pikube-node-1   NotReady   <none>   2s     v1.13.2
    pikube-node-2   NotReady   <none>   1s     v1.13.2

and then

    pi@pikube-master:~ $ kubectl get nodes
    NAME            STATUS   ROLES    AGE     VERSION
    pikube-master   Ready    master   19m     v1.13.2
    pikube-node-0   Ready    <none>   6m24s   v1.13.2
    pikube-node-1   Ready    <none>   2m19s   v1.13.2
    pikube-node-2   Ready    <none>   2m18s   v1.13.2
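Rather than re-running kubectl get nodes by hand, you could count the nodes that are not Ready yet and wait until that reaches zero. A rough sketch, with not_ready_count being a name I made up and the STATUS column assumed to be the second one, as in the output above:

```shell
#!/bin/sh
# Read "kubectl get nodes" output on stdin and print how many nodes
# have a STATUS other than "Ready". Assumes STATUS is column 2.
not_ready_count() {
    awk 'NR > 1 && $2 != "Ready" { n++ } END { print n + 0 }'
}

# Usage (polls every 5 seconds until all nodes are Ready):
#   while [ "$(kubectl get nodes | not_ready_count)" -gt 0 ]; do sleep 5; done
```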

Do not auto-update k8s packages

sudo apt-mark hold kubelet kubeadm kubectl

Get the dashboard running

Create a cluster role binding (this has admin privileges - don't do this on prod)

Not 100% sure this is needed. This was in a couple of other, older guides that ended up not working for me.

    echo -n 'apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: ClusterRoleBinding
    metadata:
      name: kubernetes-dashboard
      labels:
        k8s-app: kubernetes-dashboard
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-admin
    subjects:
    - kind: ServiceAccount
      name: kubernetes-dashboard
      namespace: kube-system' | kubectl apply -f -

Deploy the dashboard

I kept a copy of the YAML file used below in a gist here.

    wget https://raw.githubusercontent.com/kubernetes/dashboard/master/aio/deploy/recommended/kubernetes-dashboard-arm.yaml
    kubectl apply -f ./kubernetes-dashboard-arm.yaml

    pi@pikube-master:~ $ kubectl get pods --all-namespaces
    NAMESPACE     NAME                                    READY   STATUS              RESTARTS   AGE
    kube-system   coredns-86c58d9df4-h8rwq                1/1     Running             1          23h
    kube-system   coredns-86c58d9df4-pwhpq                1/1     Running             1          23h
    kube-system   etcd-pikube-master                      1/1     Running             1          23h
    kube-system   kube-apiserver-pikube-master            1/1     Running             2          23h
    kube-system   kube-controller-manager-pikube-master   1/1     Running             1          23h
    kube-system   kube-proxy-g59t9                        1/1     Running             1          23h
    kube-system   kube-proxy-ngv8h                        1/1     Running             1          23h
    kube-system   kube-proxy-ngx7l                        1/1     Running             1          23h
    kube-system   kube-proxy-ws7xb                        1/1     Running             1          23h
    kube-system   kube-scheduler-pikube-master            1/1     Running             1          23h
    kube-system   kubernetes-dashboard-56bcddb89b-rjzdk   0/1     ContainerCreating   0          24s
    kube-system   weave-net-dk8np                         2/2     Running             5          23h
    kube-system   weave-net-r92kj                         2/2     Running             5          23h
    kube-system   weave-net-rw2nz                         2/2     Running             5          23h
    kube-system   weave-net-wxhsz                         2/2     Running             4          23h

Either start the proxy on master and then tunnel to it from your local machine

    # on master
    kubectl proxy

    # on your local machine
    ssh -N -L 8001:localhost:8001 pi@pikube-master

Or (better) set up your cluster in your local ~/.kube/config (copy the stuff from ~/.kube/config on master) and then locally do

kubectl proxy

You can then access the dashboard (in both cases) here:

http://localhost:8001/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy
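The URL looks odd at first, so here is how I read it: the API server proxies to a service under /api/v1/namespaces/NAMESPACE/services/SCHEME:SERVICE:PORTNAME/proxy, and for the dashboard the port name is left empty. A small sketch that builds the same URL (dashboard_url and its parameter order are my own invention):

```shell
#!/bin/sh
# Build the kubectl proxy URL for the dashboard service.
# $1: local proxy port (default 8001, the kubectl proxy default)
# $2: namespace (default kube-system)
# $3: service name (default kubernetes-dashboard)
dashboard_url() {
    port="${1:-8001}"
    namespace="${2:-kube-system}"
    service="${3:-kubernetes-dashboard}"
    # "https:<service>:" = scheme, service name, and an empty port name.
    printf 'http://localhost:%s/api/v1/namespaces/%s/services/https:%s:/proxy\n' \
        "$port" "$namespace" "$service"
}

dashboard_url
```

This comes in handy if you run kubectl proxy on a different port or deploy the dashboard into another namespace.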

To log in you need to create a user/token:

See also: https://github.com/kubernetes/dashboard/wiki/Creating-sample-user

    echo -n 'apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: admin-user
      namespace: kube-system' | kubectl apply -f -

    echo -n 'apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: admin-user
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-admin
    subjects:
    - kind: ServiceAccount
      name: admin-user
      namespace: kube-system' | kubectl apply -f -

    pi@pikube-master:~ $ kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print$1}')
    Name:         admin-user-token-trlxs
    Namespace:    kube-system
    Labels:       <none>
    Annotations:  kubernetes.io/service-account.name: admin-user
                  kubernetes.io/service-account.uid: 65a7d5be-1e52-11e9-91c0-b827eb3539fa

    Type:  kubernetes.io/service-account-token

    Data
    ====
    namespace:  11 bytes
    token:      eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLXRybHhzIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI2NWE3ZDViZS0xZTUyLTExZTktOTFjMC1iODI3ZWIzNTM5ZmEiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.fbaUgCE-x_bfiTphiLuUPEH8v_v-eELn5uEVQndIPG6wt7vfULCDNS3757LUWRR-AAkhZDk-cb0PeXXUse7WPVuN8ZAVXRv-N-IqU_uZEaKtUU-saCUx9T0BikLFyvh9gIDmNAywF-librhRIJpdXPe_O9JPy5TXzcZwnWn5CVsKKXiGZ4yqWcSUUECtmybvfluBOS9gTHvylzVxRdiz0a9zMwNNIWUKNQOo-Pldx8npKMvh-OvQj4HfR2bSgAzu7-anWfQrQ1Wlm-lu8zM0U-F-J797KnU8CAqOHm571qt9t5lBa4V4rGspAWRqwWlQNyYIofr9yLvuo4Q3SUjDXQ
    ca.crt:     1025 bytes

Log in to the dashboard with the "Token" option, using the token value from the secret above.
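Copying the token out of that output by hand is error-prone. A small sketch that pulls out just the token value from the describe output; extract_token is a hypothetical helper, and it assumes the "token:" label starts its own line as shown above.

```shell
#!/bin/sh
# Read "kubectl describe secret" output on stdin and print only the
# value of the token field. Assumes a line of the form "token: <value>".
extract_token() {
    awk '$1 == "token:" { print $2 }'
}

# Usage:
#   kubectl -n kube-system describe secret \
#     $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}') \
#     | extract_token
```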

Done.

Helpful Links

- https://gist.github.com/alexellis/a7b6c8499d9e598a285669596e9cdfa2

- https://github.com/alexellis/k8s-on-raspbian

- https://github.com/alexellis/k8s-on-raspbian/blob/master/GUIDE.md

- https://github.com/kubernetes/dashboard/wiki/Access-control

- https://kubernetes.io/docs/reference/kubectl/cheatsheet/

- https://github.com/kubernetes/dashboard